Mosquito Egg Computer Vision

This article was first published on Abt Associates’ website as Part 1, with material added from this research poster presentation and this talk. The code for this project is on GitHub, alongside a demo of the app.

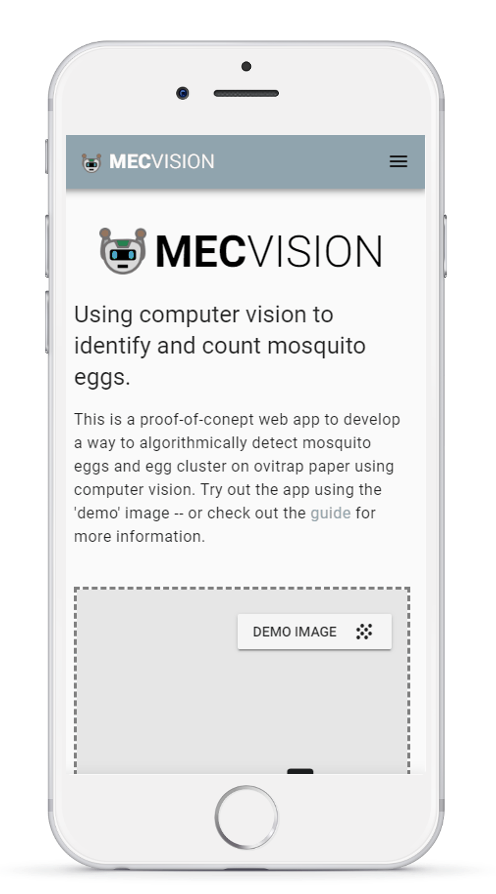

Mosquito Egg Computer Vision, or MECVision (GitHub), is a web app that uses computer vision (object detection) on a smartphone to analyze a photo of mosquito egg trap paper and instantly estimate the number of mosquito eggs on that paper (the eggs look like very tiny black dots).

This process is currently done mostly by hand around the world and, unsurprisingly, it can be very time-consuming and susceptible to human error. Pattern recognition via object detection to the rescue!

This project was part of USAID’s Zika AIRS Project, which aimed to reduce the burden of vector-borne diseases, including Zika, through robust mosquito control activities. The app has been open-sourced to allow others to contribute to and build on the source code.

This web app comes in at under 10 MB and works in any desktop web browser or on any smartphone. We also use popular software libraries with clearly exposed algorithmic inputs, so that others can replicate these numbers in the laboratory or modify the app for greater customization and precision. It combines good design, a small footprint, an eye toward usability, and modern, low-cost web frameworks into a tool that is simple, cheap, and easily handed off to local governments and programmers.

As a data scientist and project lead for AbtX (Abt Associates’ internal research and development lab), I have the privilege and challenge of connecting our field staff working at the last mile with our subject-matter experts and our engineers to produce real products with real impact. It’s an awesome responsibility at times, particularly when tackling something as important as this.

This project and the resulting web app have been some of the most challenging and interesting work I’ve done at Abt Associates, and they allowed me to shift from digital product development into the world of image processing and machine learning. It’s been a wild ride.

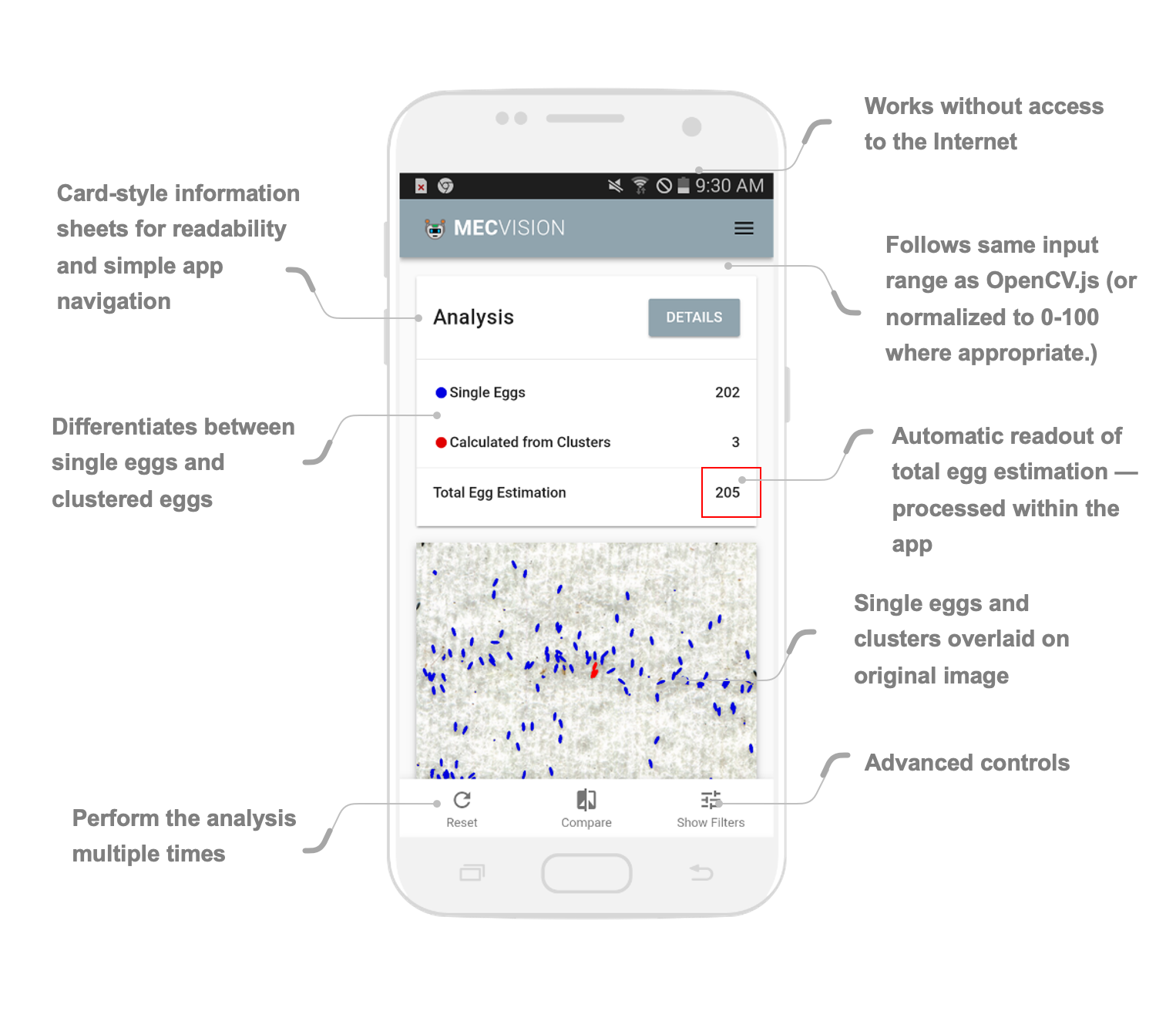

The MECVision prototype on a mobile device, counting single and clusters of eggs.

Background

Why We Count Mosquito Eggs

It’s hard to overstate the global burden that mosquito-borne diseases place on society. Contact between Aedes aegypti mosquitoes and humans facilitates the transmission of infectious diseases such as Zika, dengue fever, and chikungunya.

To eliminate or reduce the spread of these diseases, we have to collect information about the mosquito populations involved in their transmission and determine their distribution, abundance and breeding habits, among other facts.

One of the ways we gather data about mosquitoes in an environment is to track them at the last mile, literally down to where individual mosquitoes lay their eggs, and to follow how those numbers change over time.

An ovitrap held by a health worker. In this photo, the health officer is about to set the trap in a residence.

The Challenge of Counting

Local health workers or field technicians set traps (called ovitraps; in this case, paper ovitraps) in standing water containers. The traps attract female mosquitoes, which lay their eggs on the inner surfaces of the trap, where the eggs stick to the paper lining the sides. The technicians return to each site a week later to collect the papers and count the eggs exposed on them.

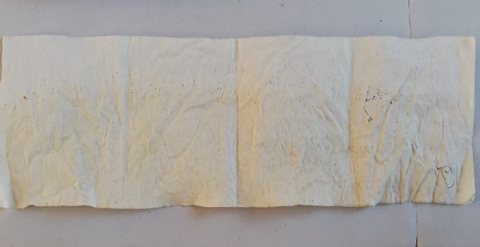

Given the small size of mosquitoes themselves, you can just barely see the eggs with the naked eye. They look like tiny grains of ground coffee. While the typical mosquito can lay around 100-200 eggs at a time, you can have many hundreds of such “grains” on a given sheet of ovitrap paper, which is about the size of a standard napkin.

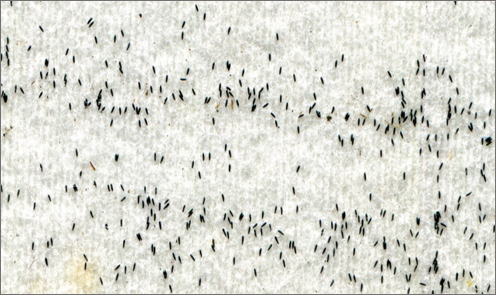

Paper towel with mosquito eggs as seen with the naked eye.

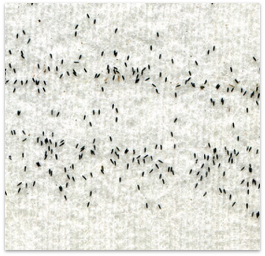

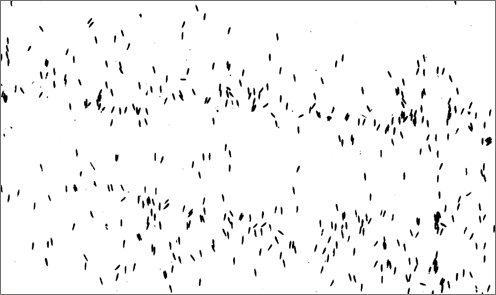

Eggs under a 20x stereoscope lens.

As it turns out, counting mosquito eggs by hand is tedious, eye-straining, error-prone, inconsistent, and time-consuming work. It’s one of those tasks where, once you start doing it manually, your “there-has-to-be-another-way-to-do-this” voice kicks in.

When health workers are conducting surveillance of only a few ovitraps deployed in the field, the process of manually counting the eggs of each trap might be viable. However, when mosquito surveillance is a wide-scale initiative, and the number of houses under monitoring is a hundred or more, you need an enormous number of workers. Our team decided we needed a faster tool and developed it with the help of our colleagues at Abt Associates.

Our team began looking into ways to use computer vision (that is, digital object recognition) to process an image of these papers, identify the objects that are eggs, and output the number of eggs that it “sees.” We needed something that would be easily accessible, easy to use, and available on a smartphone or laptop with little or no internet connectivity.

This might be a challenge.

Building a Proof of Concept

Before investing in a solution and piloting a project, we had to see whether this would even be a feasible product. There has been a lot of progress in object detection, computer vision, and smartphone cameras over the years, but we didn’t know whether they would all come together into something we could actually use.

Proof of Concept app with Vue.js and Bulma

What’s more, we suspected that something like this might already be on the market. We did our homework, and while there were some examples, including this very sophisticated iCount software, none quite matched the use case we were hearing about from the field, where technicians needed something portable and simple.

Based on our constraints and the tools we had available, we opted to develop a proof of concept as a progressive web app and do all of the analysis within the browser. We wanted to keep things simple, accessible, and easily modifiable by ourselves or by anyone who wanted to fork the source code and update it to match their particular environment (within a country’s Ministry of Health, for example).

Proof of Concept to the Field

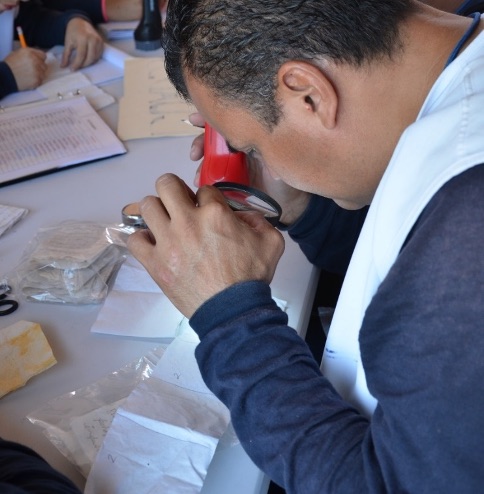

Conducting a research field visit to better understand the process of counting mosquito eggs. Field work in Jamaica.

Field Research

Having a proof of concept on hand, while knowing that we would rebuild it entirely from the ground up, enabled us to flip the traditional model of software development on its head. We didn’t develop an app for a group and then ask how we did. Instead, we enlisted the experts to bring their experience and insight to co-design and co-develop alongside us. This ensured that we converged on a useful solution and built in immediate feedback loops to avoid dead ends.

The first major challenge we had in the field was accounting for the nuances and variations of the counting process. We learned that field technicians and lab experts look for black dots on white paper. Sometimes they use magnifying glasses, microscopes, or stereoscopes, but most of the time they simply count by hand, perhaps with a handheld clicker to keep the tally.

Testing the web-based prototype with assumptions about the process; collecting feedback. Mosquito Control and Research Unit, Kingston, Jamaica.

Why a Progressive Web App

A Progressive Web App is a set of practices that nudge a web app to behave like a native iOS or Android app — so you can put it on your home screen alongside other apps, and run it from either a phone or desktop browser across devices.

And because it’s a “progressive” app, service workers store the software that runs the site and the analysis when you first access the page, so the app works even when completely offline, including the analysis and egg counting. Nothing pings back to a server.

Finally, the app is built on open-source technologies and libraries, meaning that the source code itself can be updated and tailored to other use cases.

Building the App

Based on what we learned from building the proof of concept, and then incorporating all of the feedback from the field research, early users, interviews, and more, we got to work immediately.

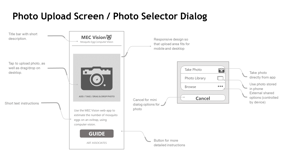

Wireframes

We developed consistent wireframes to help us communicate while we were building the app, and also to help us as a team understand the process, what was in scope, how the technical walkthrough was meant to occur, and more.

Initial wireframe designs for what the app would do as it takes a picture (or selects one from the device), analyzes the image, and predicts the number of eggs.

From there, we spent the next few months working in iterative development sprints: building out features, plugging into frameworks like Vue.js to handle the front-end interaction (as well as most of the logic, which was written in JavaScript), and using Vuetify for the material design framework so that we weren’t building the interface from scratch.

Using the App

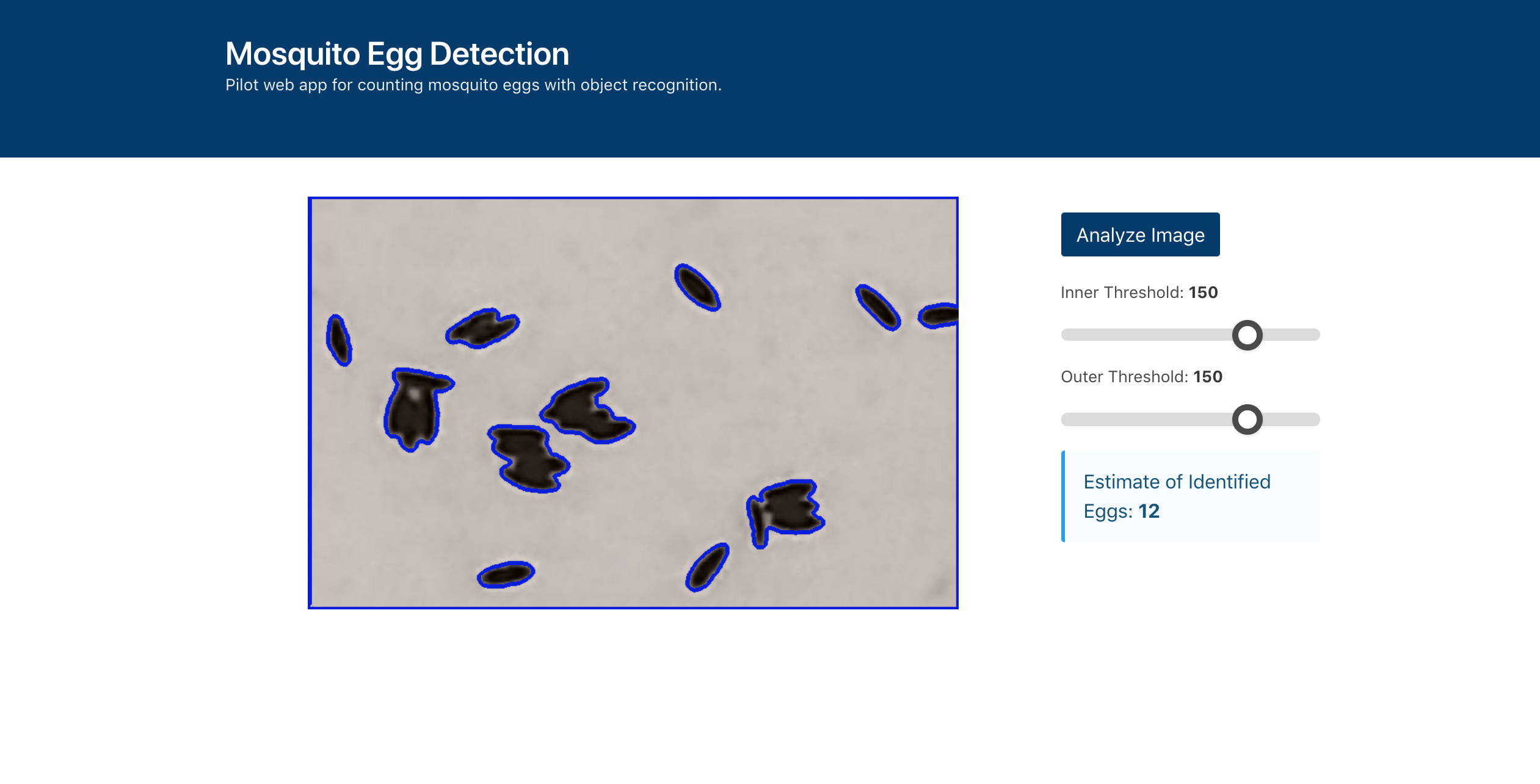

Desktop version of MECVision processing image through its stages of analysis.

The process itself is fairly straightforward — the app works essentially by loading an image, adjusting it as necessary, processing the image, reviewing the results, and then tweaking the parameters if necessary and re-running the calculations.

1. Load an Image

When the app (or website) is loaded in a device’s web browser, it loads all of the code it needs to operate, so no information needs to be sent to or received from a remote server or database. Everything happens on the device on which it is loaded.

After the app loads, a user can either take a picture, or upload one from their device (or use the ‘demo’ pictures in memory to see it work).

2. Adjust the Image

Once an image is selected, the user adjusts it (rotating, resizing, and cropping), zooming in and out with pinch-and-zoom on a phone or with the scroll function on a desktop.

The user then selects the ‘type’ of image to analyze, which sets more appropriate default values for the analysis. (The current templates are a classic ovitrap paper, a magnified image, and a microscope image.) These templates only provide default values; the advanced controls are available after the initial analysis.

3. Analyze the Image

Once the image has been loaded and adjusted, the user presses the button to ‘analyze’ the image.

At this point, the app runs the analysis, mostly with the open-source OpenCV.js library, using the input values set through the app’s user interface.

The app chains together several tools from the OpenCV.js library to analyze the image step by step, until it can count the number of mosquito eggs in the image.

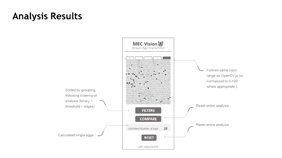

4. Review the Analysis

When the analysis is complete, the total egg estimate is displayed. The user can also see a breakdown of single-egg counts versus clustered-egg counts, as well as additional ‘details’ in a pop-up. In addition, the user can ‘compare’ the output at each stage of the algorithm by pressing the ‘compare’ button and using the left and right arrows to step through the stages in order.

5. Advanced Algorithm Controls

Depending on the process and image used, it might be necessary to adjust the filters and threshold value. To do so, select the ‘show filters’ button and review the settings, which include the threshold value (the point at which pixels become black or white), the single-egg minimum and maximum values, and the maximum cluster size (to filter out large foreign objects like insects or dirt). When a value is changed here, the algorithm re-runs automatically (without the animation) to reflect the new values.

Technical Walkthrough

For MECVision, we use several algorithmic procedures tied together, in order to isolate the mosquito egg items and count them separately.

The app uses a library called OpenCV.js (the JavaScript build of OpenCV, the Open Source Computer Vision library) to process the image in steps: highlighting contrast, detecting edges, finding the contours of objects within the image, and making calculations based on that analysis.

1. Original Image

The quality of the starting image cannot be overstated. Individual eggs must be distinguishable from the egg paper, there should not be too many discolorations or debris that could be mistaken for mosquito eggs, and the eggs themselves should be mostly flat rather than stacked in vertical rafts. The rule of thumb is that if something is difficult for the human eye to see, it will be difficult for the algorithm to decipher as well.

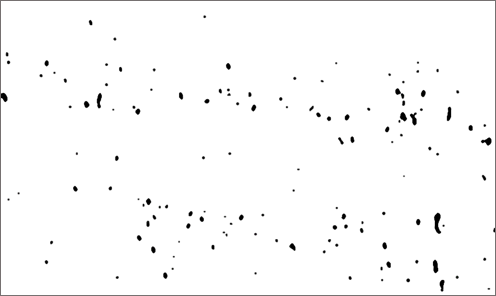

2. Threshold Value

This step converts the image to binary black-and-white values, ideally removing the background and highlighting the eggs in the foreground. The advanced controls allow this value to be modified: too high a value washes the eggs out of the image and the subsequent analysis, while too low a value erroneously includes paper shadows and creases.
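As a rough illustration, the thresholding step can be sketched in plain Python, assuming a grayscale image stored as rows of integers from 0 to 255. This is a hypothetical example, not MECVision’s code: `to_gray` and `threshold` are names made up here, and in OpenCV.js the equivalent work would typically be done with `cv.cvtColor` and `cv.threshold`. In this sketch, pixels darker than the cutoff become foreground, so raising the cutoff keeps more dark pixels (shadows included); the app’s own slider may be defined in the opposite direction.

```python
def to_gray(rgb_image):
    """Convert rows of (r, g, b) tuples to 0-255 luminance values (ITU-R BT.601 weights)."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in rgb_image]


def threshold(gray_image, cutoff):
    """Inverted binary threshold: 1 (foreground) where a pixel is darker than
    `cutoff`, 0 (background) otherwise, so dark eggs on white paper stand out."""
    return [[1 if px < cutoff else 0 for px in row] for row in gray_image]


# Hypothetical 3x3 patch: bright paper pixels around a dark, egg-like spot.
gray = [[250, 40, 245],
        [30, 35, 250],
        [248, 252, 120]]
print(threshold(gray, 100))  # [[0, 1, 0], [1, 1, 0], [0, 0, 0]]
```

Note how the mid-gray pixel (120) is excluded at a cutoff of 100 but would be included at 128, which is the kind of trade-off the threshold control exposes.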

2b. Erode and Dilate

These two steps work together to fine-tune and simplify the image. First, the image is “eroded” to remove “noise,” that is, pixels that are too small to be objects and shouldn’t be counted in the analysis. Then the image is slightly “dilated” so that the remaining objects are filled out and counted fully (and without internal holes, which cause other problems down the road).
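Erosion followed by dilation (a morphological “opening”) can be sketched on a binary mask like the one thresholding produces. This is a simplified, hypothetical version with a fixed 3x3 square neighborhood and one pass of each; the kernel size and number of passes MECVision actually uses are not stated here, and in OpenCV.js this would normally be handled by `cv.erode` and `cv.dilate`.

```python
def _morph(mask, keep):
    """Apply a 3x3 neighborhood rule to a binary mask (list of rows of 0/1).
    Neighbors that fall outside the image are simply ignored."""
    rows, cols = len(mask), len(mask[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [mask[rr][cc]
                      for rr in range(max(r - 1, 0), min(r + 2, rows))
                      for cc in range(max(c - 1, 0), min(c + 2, cols))]
            out[r][c] = 1 if keep(window) else 0
    return out


def erode(mask):
    # A pixel survives only if its whole 3x3 neighborhood is foreground,
    # so isolated specks of noise disappear.
    return _morph(mask, all)


def dilate(mask):
    # A pixel becomes foreground if any neighbor is foreground, which grows
    # the surviving objects back out and fills small internal holes.
    return _morph(mask, any)


def open_mask(mask, iterations=1):
    """Erode `iterations` times, then dilate the same number of times."""
    for _ in range(iterations):
        mask = erode(mask)
    for _ in range(iterations):
        mask = dilate(mask)
    return mask


# A one-pixel speck in the corner and a 3x3 egg-sized blob.
mask = [[1, 0, 0, 0, 0, 0, 0],
        [0, 0, 0, 0, 0, 0, 0],
        [0, 0, 0, 0, 0, 0, 0],
        [0, 0, 0, 1, 1, 1, 0],
        [0, 0, 0, 1, 1, 1, 0],
        [0, 0, 0, 1, 1, 1, 0],
        [0, 0, 0, 0, 0, 0, 0]]
cleaned = open_mask(mask)
print(cleaned[0][0], sum(map(sum, cleaned)))  # 0 9
```

The speck is erased while the blob shrinks to its center and then grows back to its original 3x3 footprint, which is the point of pairing the two operations.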

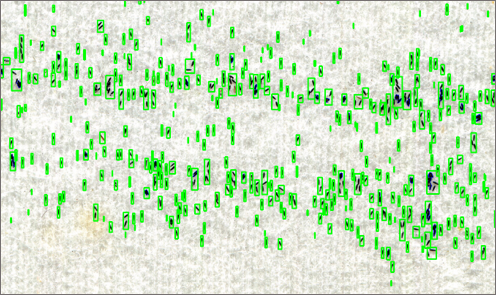

3. Object Detection

Next, the analysis runs a “watershed” detection algorithm (which creates the green boxes) to identify the location, size, and boundaries of regions of interest (ROIs) from their contours and so-called “blob centroids.” If you read more about OpenCV, you’ll also learn about Canny edge detection, which we tried for this app but which did not produce results that were as accurate.
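MECVision uses a watershed algorithm for this step. As a simpler stand-in, and explicitly not the app’s method, the sketch below labels 8-connected groups of foreground pixels and reports each group’s area, bounding box (the equivalent of the green boxes), and centroid. Unlike watershed, plain connected-component labeling makes no attempt to split touching eggs; in this sketch that job is left to the size-based sort in step 4. `find_blobs` is a hypothetical name.

```python
from collections import deque


def find_blobs(mask):
    """Label 8-connected foreground regions in a binary mask (rows of 0/1).

    Returns one dict per region with its pixel area, bounding box as
    (x, y, width, height), and centroid as (x, y)."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            # Breadth-first flood fill from this unvisited foreground pixel.
            seen[r0][c0] = True
            queue = deque([(r0, c0)])
            pixels = []
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        rr, cc = r + dr, c + dc
                        if (0 <= rr < rows and 0 <= cc < cols
                                and mask[rr][cc] and not seen[rr][cc]):
                            seen[rr][cc] = True
                            queue.append((rr, cc))
            ys = [p[0] for p in pixels]
            xs = [p[1] for p in pixels]
            blobs.append({
                "area": len(pixels),
                "bbox": (min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1),
                "centroid": (sum(xs) / len(xs), sum(ys) / len(ys)),
            })
    return blobs


mask = [[1, 1, 0, 0],
        [1, 0, 0, 1],
        [0, 0, 0, 1],
        [1, 0, 0, 0]]
print([b["area"] for b in find_blobs(mask)])  # [3, 2, 1]
```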

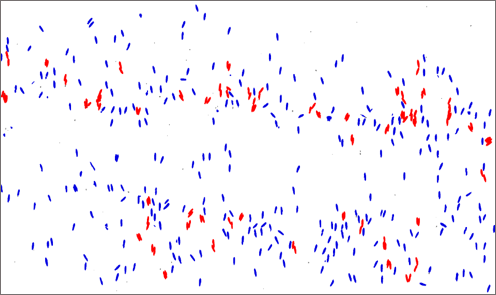

4. Object Sort

Next, the app runs the objects through a series of simple custom calculations to determine their sizes: the total number of objects detected, the likely size of the median egg, the sizes of egg clusters, and so on. As the calculations are performed, each grouping is assigned a color, which is then drawn onto the image in the app (by default, blue for single eggs, red for clusters, green for objects that are too small, and so on).
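The sorting step can be illustrated with a small size-based classifier. The parameter names `single_min`, `single_max`, and `cluster_max` are hypothetical stand-ins for the single-egg minimum and maximum and the maximum cluster size exposed in the advanced controls, and the example thresholds are invented. The first three colors follow the defaults described above; the color for oversized objects is a placeholder, since no default is given for it.

```python
from collections import Counter

# Defaults described for single eggs, clusters, and too-small objects;
# the "too_large" color is a placeholder assumption.
COLORS = {"single": "blue", "cluster": "red", "too_small": "green", "too_large": "gray"}


def classify(area, single_min, single_max, cluster_max):
    """Assign an object to a group based on its pixel area."""
    if area < single_min:
        return "too_small"   # specks of noise
    if area <= single_max:
        return "single"      # plausibly one egg
    if area <= cluster_max:
        return "cluster"     # several touching eggs
    return "too_large"       # e.g. insects or dirt, excluded from the count


def sort_objects(areas, single_min, single_max, cluster_max):
    """Return a (group, color) pair per object plus a tally of each group."""
    groups = [classify(a, single_min, single_max, cluster_max) for a in areas]
    labeled = [(g, COLORS[g]) for g in groups]
    return labeled, Counter(groups)


labeled, tally = sort_objects([5, 30, 35, 90, 500], single_min=10, single_max=50, cluster_max=300)
print(tally["single"], tally["cluster"], tally["too_small"], tally["too_large"])  # 2 1 1 1
```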

4b. Image Overlay

Finally, the sorted results are overlaid on the original color image. This makes comparison easier (through a button in the app) and lets the user judge whether the analysis was successful and whether the advanced controls need to be adjusted to refine the image analysis.
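One way to picture the overlay is a per-pixel alpha blend of each object’s group color onto the original photo. The sketch below illustrates that general technique under stated assumptions (OpenCV.js offers `cv.addWeighted` for whole-image blends); it is not a description of how MECVision draws its overlay, and `overlay`, `blend`, and the 50% opacity are hypothetical.

```python
def blend(pixel, color, alpha=0.5):
    """Mix an (r, g, b) pixel with an (r, g, b) color; alpha is the color's weight."""
    return tuple(round((1 - alpha) * p + alpha * c) for p, c in zip(pixel, color))


def overlay(image, labels, palette, alpha=0.5):
    """Tint labeled pixels with their group color and leave the rest unchanged.

    image:   rows of (r, g, b) tuples (the original color photo)
    labels:  same shape; a group name such as "single", or None for background
    palette: group name -> (r, g, b)
    """
    return [[blend(px, palette[lab], alpha) if lab is not None else px
             for px, lab in zip(img_row, lab_row)]
            for img_row, lab_row in zip(image, labels)]


photo = [[(200, 200, 200), (20, 20, 20)]]
labels = [[None, "single"]]
print(overlay(photo, labels, {"single": (0, 0, 250)}))  # [[(200, 200, 200), (10, 10, 135)]]
```

Keeping the original pixels visible under a partial tint is what lets a user check, object by object, whether the detections line up with real eggs.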

Technical Notes

Recognizing eggs

One challenge we found during research and testing is that objects that merely look like eggs can be mistaken for them, including clumps of dirt, other organic matter, and even shadows, folds, and imperfections in the egg paper. This is difficult for human technicians to judge as well!

Single eggs versus egg clusters

Mosquito eggs sometimes cluster into groups, occasionally lying on top of each other in ways that are difficult to see and count. The app deals with this by separating “single” eggs from egg “clusters.” It does this after identifying all of the ROIs in the image and calculating their sizes (area in square pixels, in this case).

The app assumes there will be more “single” eggs than clusters, so it estimates the typical single-egg size as the median area of all the ROIs. It then divides the area of each remaining cluster by this approximate single-egg size to estimate how many eggs the cluster contains. There are some accuracy challenges with this, but short of moving to a more sophisticated model, it works pretty well in practice.
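The median-based estimate described above fits in a few lines. This is a sketch under stated assumptions: the `cluster_factor` of 1.5 (how much larger than the median an ROI must be before it is treated as a cluster) and rounding each cluster to the nearest whole egg are illustrative choices, not values taken from MECVision, and `estimate_eggs` is a hypothetical name.

```python
from statistics import median


def estimate_eggs(areas, cluster_factor=1.5):
    """Estimate an egg count from ROI areas (in square pixels).

    Assumes single eggs outnumber clusters, so the median area approximates
    one egg. ROIs larger than cluster_factor * median are treated as clusters
    and converted to egg counts by dividing by that single-egg size."""
    if not areas:
        return {"single_size": 0, "singles": 0, "clusters": 0, "cluster_eggs": 0, "total": 0}
    single_size = median(areas)
    singles = clusters = cluster_eggs = 0
    for area in areas:
        if area > cluster_factor * single_size:
            clusters += 1
            cluster_eggs += round(area / single_size)
        else:
            singles += 1
    return {
        "single_size": single_size,
        "singles": singles,
        "clusters": clusters,
        "cluster_eggs": cluster_eggs,
        "total": singles + cluster_eggs,
    }


# Five ROIs of 20 square pixels (single eggs) plus two larger clusters.
print(estimate_eggs([20, 20, 20, 20, 20, 60, 100])["total"])  # 13
```

Because the median ignores the few large clusters, the single-egg size stays stable as long as singles really are the majority; on a sheet dominated by clusters, the estimate would drift upward and undercount.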

Performance

Having a web app pick out black dots on a white background and add up the count is one thing, but it’s quite another to say that this translates into real-world precision and time savings. Could it really compete against trained technicians?

Precision

The first question I always get is how “accurate” the app’s reading is, which is a tougher question than you might think. Due to the nature of this work, it’s difficult for human counters to be accurate. For example, it’s not always clear whether you’re counting an egg or dirt, or two eggs or half of an egg. Certain estimates have to be made, and those estimates are compounded when you multiply them by 800 eggs per sheet, and then by many sheets in a given day.

In fact, the technicians will often randomly select sheets to have others count them, just to make sure they’re all getting reasonably similar numbers. They won’t be exact between technicians or between counts, but they should be close.

For our app, then, it makes less sense to talk about a mythical accuracy measured against an unknown true value, and more sense to talk about precision: consistency with, and proximity to, human evaluators, with the hope that our algorithm performs in the same range.
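Framed this way, the check for a single sheet is whether the app’s count falls within the spread of the human counts, and how far it sits from their median. The helper below is a hypothetical illustration, and the counts in the example are invented, not results from the study described here.

```python
from statistics import median


def compare_to_counters(app_count, human_counts):
    """Compare one app count with several technicians' counts of the same sheet."""
    low, high = min(human_counts), max(human_counts)
    mid = median(human_counts)
    return {
        "within_human_range": low <= app_count <= high,
        "human_spread": high - low,
        "pct_from_human_median": 100 * (app_count - mid) / mid,
    }


# Invented example: three technicians and the app counting one sheet.
print(compare_to_counters(210, [200, 190, 230]))
# {'within_human_range': True, 'human_spread': 40, 'pct_from_human_median': 5.0}
```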

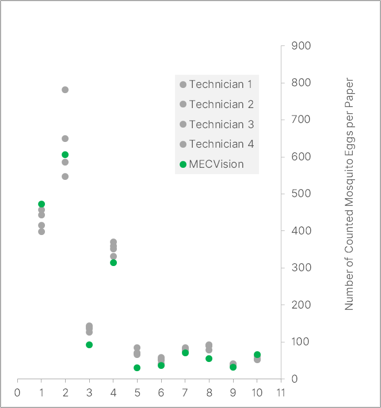

For our first effort, we ran exactly that experiment: multiple technicians counted the same paper and recorded their results (and times), and we then compared those with the MECVision results for the same paper.

Early precision comparison testing of egg counting tasks of MECVision counting compared to technicians.

In this preliminary result, we landed pretty close to where we wanted to be, since our goal was for the app to perform within the grouping of human counts. Some of its counts are a little low and some a little high, but overall you can see they’re within reason.

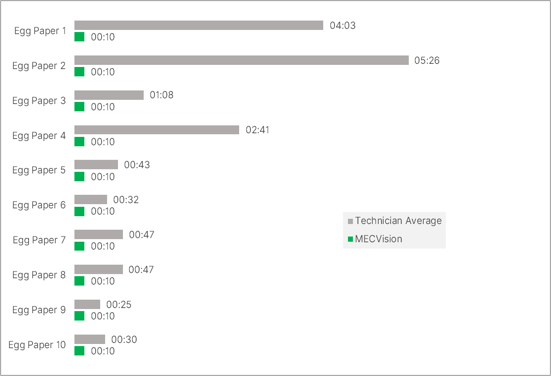

Time

Then it was on to timing the counts, because we wanted to see how much time we could actually save the technicians by having them use this app.

In the same study, we had technicians review 10 papers, which took around 20 minutes in total. We then compared that with MECVision and found that the same process (with the same performance as in the precision exercise above) took around 2 minutes.
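Taking those rounded figures at face value, the per-paper arithmetic works out roughly as follows (approximate, since both totals are stated as “around”):

```latex
\begin{aligned}
t_{\text{manual}} &\approx \frac{20\ \text{min}}{10\ \text{papers}} = 2\ \text{min per paper} = 120\ \text{s per paper},\\
t_{\text{app}} &\approx \frac{2\ \text{min}}{10\ \text{papers}} = 12\ \text{s per paper},\\
\frac{t_{\text{manual}}}{t_{\text{app}}} &\approx \frac{120\ \text{s}}{12\ \text{s}} = 10,
\qquad 1 - \frac{2\ \text{min}}{20\ \text{min}} = 90\%\ \text{less time.}
\end{aligned}
```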

Early timed comparison testing of egg counting tasks of MECVision counting compared to technicians.

Keep in mind, too, that the app doesn’t take zero time: a user still has to take the picture, upload it, adjust it, process it, and review the results. It’s fast, but not instantaneous.

That said, we are obviously very pleased with this result — it’s very promising even with this early study!

A Note on the Risks of Automation

One issue we really wanted to understand was how this automated digital solution would affect the routines, or even the livelihoods, of the technical staff currently doing this work. I raised it explicitly with different program managers, field staff, and partners.

We learned that, in the grand scheme of entomological surveillance and monitoring mosquito populations, this task is actually a relatively small part of the job, taking perhaps just a few hours a week. And because the work requires sustained attention and eye-straining counting, I didn’t talk to anyone who said they would miss it. In fact, freeing up the time technicians would otherwise spend counting lets them get more work done: expanding the number of traps they set and collect, completing administrative tasks, and more.

As we develop this further, there is a lesson here about the type of automation we want to achieve. This particular solution is close to the ideal case: it automates away a mundane, repetitive part of someone’s job, so their efficiency increases and they can take on a wider variety of tasks.

Summary

We created an accessible, open-source web application, MECVision, that can be used to conduct fast and accurate counts of certain mosquito eggs. The application works with no internet connectivity on any type of smartphone (iOS or Android) and even in desktop web browsers. The app can also be tailored to meet specific conditions and operating environments. And the project was executed on time and under budget (I mean, I have to throw that in there).

It’s not just about counting mosquito eggs; it’s about helping global health teams solve a problem they’ve been wanting to solve but haven’t had the tools to tackle. Any good technology is simply an extension of an original intent to solve a problem in the real world.

Next Steps

The app has been shared with several countries in the Americas and the Caribbean, and it looks set to see continued use and investment even after the Zika program closed in 2019. That is a feather in the app’s cap and a signal of USAID’s mantra of the Journey to Self-Reliance.

Further, we’ve received interest from people at a couple of different government agencies, which we are also exploring, as this underlying approach could be useful in a number of related areas.

Future Approaches

Moving beyond straightforward object detection, we are exploring going that next step with trained data models on specific use cases of mosquito eggs — capturing enough imagery data alongside labeled datasets to build even more reliable, accurate, and flexible applications.

Read More…

- Lightning Talk: Innovating Mosquito Egg Counting for Disease Prevention. SID-W Annual Conference, Washington, DC, 2019 (YouTube).

- Using Computer Vision to Count Aedes aegypti Eggs with a Smartphone. Conference research poster.

- Counting Mosquito Eggs with a Smartphone – Part 2: The Process for Designing an Automated Egg Counter. Abt Associates blog post, 2019.

- Counting Mosquito Eggs with a Smartphone – Part 1: The Challenge. Abt Associates blog post, 2019.

Acknowledgments

A lot of people came together to help develop this app and the underlying project.

- Paula Wood

- Kate Stillman

- Jean Margaritis

- Ximena Zepeda McCollum

- Alec McClean

- Justin Stein

- Kunaba Oga

- Ben Holland