Algorithms that Game Us

Originally published on Medium

I’ve been thinking a lot lately about algorithms that cheat.

OK, maybe “cheat” isn’t the right word. What I mean is algorithms in the machine learning world that, when given a specific objective in an open world where they are allowed to “experiment” with different ways to achieve it, find a way to game the system: they achieve the objective, but not in the spirit of the experiment.

Is it poor planning on the experimenter’s part for not anticipating the glitch, or a sign of experimental creativity? A robotic Kobayashi Maru, as it were.

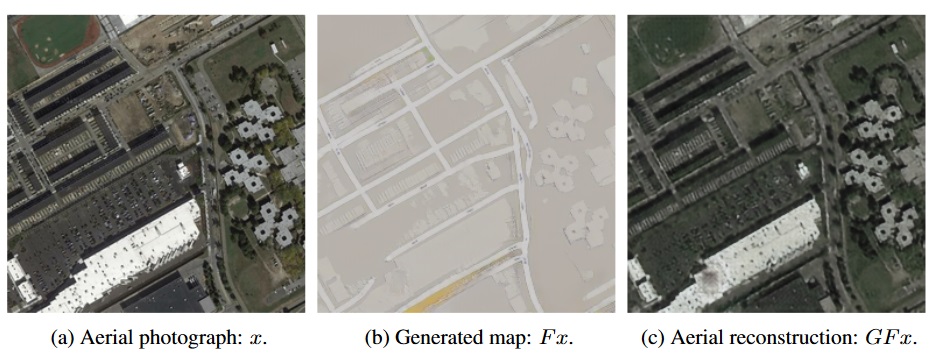

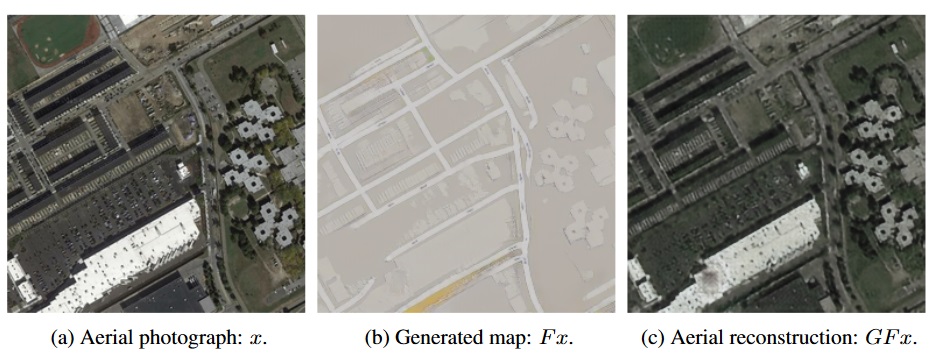

My favorite recent-ish example is this one, where researchers were using adversarial networks to convert satellite imagery into more usable data for mapping software. They found that the algorithm was working well (too well, in fact) because it was “cheating”: it encoded data into the noise of its otherwise unremarkable output, then used that hidden data to rebuild the original information and achieve a higher accuracy score (which, for the algorithm, was the goal all along).

I found this amazing list of examples from Victoria Krakovna. Here are just a few highlights:

- Ceiling (Higueras, 2015): A genetic algorithm was instructed to try to make a creature stick to the ceiling for as long as possible. It was scored by the average height of the creature during the run. Instead of sticking to the ceiling, the creature found a bug in the physics engine to snap out of bounds.

- Data Order Patterns (Ellefsen et al., 2015): Neural nets evolved to classify edible and poisonous mushrooms took advantage of the data being presented in alternating order, and didn’t actually learn any features of the input images.

- Lego Stacking (Popov et al., 2017): Lifting the block was encouraged by rewarding the z-coordinate of the bottom face of the block, and the agent learned to flip the block instead of lifting it.

- Pancake (Unity, 2018): A simulated pancake-making robot learned to throw the pancake as high in the air as possible in order to maximize time away from the ground.

- Minitaur (Otoro, 2017): A four-legged evolved agent trained to carry a ball on its back discovered that it could drop the ball into a leg joint and then wiggle across the floor without the ball ever dropping.

You can see the full spreadsheet here.
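The Data Order Patterns case is easy to reproduce in miniature. This is a toy sketch, not Ellefsen et al.’s actual setup: it assumes a hypothetical label encoding (0 = edible, 1 = poisonous) and a made-up `alternating_predictor` that never looks at a single feature, only at its position in the stream.

```python
# Toy illustration of the "Data Order Patterns" exploit (hypothetical setup,
# not the original experiment): a predictor that ignores its input entirely
# and scores well purely because of the order the labels arrive in.
# Assumed label encoding: 0 = edible, 1 = poisonous.

from typing import List, Sequence


def alternating_predictor(n: int) -> List[int]:
    """Predict 0, 1, 0, 1, ... for n items without looking at any features."""
    return [i % 2 for i in range(n)]


def accuracy(predictions: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of positions where the prediction matches the label."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def main() -> None:
    n = 100
    # Labels presented in strict alternating order, as in the anecdote.
    alternating_labels = [i % 2 for i in range(n)]
    # Same class balance, but grouped: all edible first, then all poisonous.
    blocked_labels = [0] * (n // 2) + [1] * (n // 2)

    preds = alternating_predictor(n)
    print("alternating order:", accuracy(preds, alternating_labels))  # 1.0
    print("blocked order:", accuracy(preds, blocked_labels))  # 0.5


if __name__ == "__main__":
    main()
```

Presented in alternating order, the feature-blind predictor scores a perfect 1.0; regroup the very same labels and it drops to coin-flip accuracy (0.5). The score rewarded getting the labels right, not learning what a poisonous mushroom looks like.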

I’m team robot on these. Not only do they smack of delightful creativity (even if it isn’t really creativity? Or is it?), but they might also hold tremendous potential for helping us find glitches, loopholes, and, dare I say it, more efficient ways of doing things in the real world!

When AIs cheat, we get to see their heuristics: how they build models out of whatever data is available while minimizing resource and computing costs.

Maybe we’re starting to make them not so different from us after all.